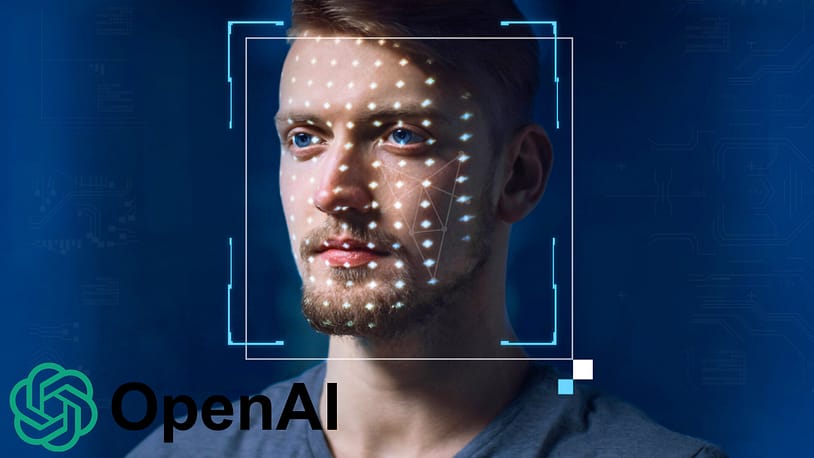

OpenAI, the top dog in generative AI, has revealed a new deepfake image detector that identifies AI-generated images, aiming to combat the ongoing challenge of misleading content.

Quick Takes:

- OpenAI unveils its deepfake image detector to revolutionize online content verification.

- The main focus of the tool is to combat misleading content, distinguishing AI-generated images from pictures taken by humans.

- Adobe, Microsoft, and DeepMedia are also battling against deepfakes through similar initiatives.

The announcement of OpenAI’s deepfake image detector — first revealed during the Wall Street Journal’s Tech Live conference in Laguna Beach, California — has garnered a significant amount of attention.

Telling AI-generated images apart from images created by humans can be a challenge and often comes down to human judgment. This is where OpenAI’s forthcoming tool comes in: it aims to offer a robust defense against the spread of misleading deepfakes, with a claimed reliability of 99%.

Notably, OpenAI also revealed plans to implement stricter content guidelines to strengthen its defenses and strategies against harmful content.

Other Tech Giants Battling Deepfakes

The battle against deepfakes isn’t only OpenAI’s mission; other entities, including Adobe, Microsoft, and DeepMedia, are also crafting detection mechanisms. Adobe, in particular, recently revealed its “AI watermarking” system, which adds a symbol to AI-generated images to show their origin. Still, this system is not foolproof; the metadata is removable.

Other similar tools have also been outwitted. However, if these newer versions succeed and work invisibly, it would mark a significant advancement in the online world, changing the way digital media is perceived and interacted with.